Performance indicators for agricultural economic journals

This blog was written by Robert Finger, Nils Droste, Bartosz Bartkowski and Frederic Ang

We present a new coherent record on the performance indicators (such as acceptance rates, times between submission and first response and impact factors) of ten leading journals in the field of Agricultural Economics and Policy over the period from 2000 to 2020. The good news is that turn-around times have decreased, and impact factors have increased substantially over time. However, the proportion of accepted articles has sharply decreased. Our analysis also reveals large differences across journals in all dimensions.

What is a good journal to publish in? The assessment of the quality of journals in the field of Agricultural Economics and Policy is often based on bibliometric information such as the ‘Impact Factor’, or on subjective reputation rankings. However, there are factors beyond bibliometric indicators that may be relevant for the decision on where to submit a manuscript. For example, metrics like the ‘Impact Factor’ may not correspond with the expected career impact of publishing in specific journals. Moreover, authors’ journal choices are also driven by a variety of other criteria such as refereeing speed, likelihood of acceptance, turn-around time, and prestige. For journals in the field of Agricultural Economics and Policy, we lack a broad overview of such metrics and information to support authors’ publication choices.

We compiled and analysed the first coherent record on a broad range of performance indicators of ten leading journals in the field of Agricultural Economics and Policy (see Table 1) over the period from 2000 to 2020. To create the dataset, we screened hundreds of editor reports, interacted with all journals to validate extracted data and fill data gaps, and harmonised the information. Based on our final dataset, which is available open access, we conduct a wide range of analyses. We provide descriptive plots on developments over time and comparisons across journals. We also provide correlations across different metrics. Finally, we conduct a data envelopment analysis (DEA) to aggregate the first response time, impact factor and acceptance rate into one holistic score. Our analysis aims to provide transparent information to authors and editors in the field, guiding both groups to better decisions. We also draw conclusions for journal editors and associations in our field.

Table 1. Journal ranking aggregated from six different rankings (e.g. survey-based rankings, impact factors and other metrics); see Finger et al. (2022) for details.

| Rank | Journal name | Abbreviation |
|------|--------------|--------------|
| 1 | American Journal of Agricultural Economics | AJAE |
| 2 | Food Policy | FP |
| 3 | Journal of Agricultural Economics | JAE |
| 4 | Agricultural Economics | AE |
| 5 | European Review of Agricultural Economics | ERAE |
| 6 | Australian Journal of Agricultural and Resource Economics | AJARE |
| 7 | Journal of Agricultural and Resource Economics | JARE |
| 8 | Applied Economic Perspectives and Policy | AEPP |
| 9 | Canadian Journal of Agricultural Economics | CJAE |
| 10 | Agribusiness | AB |

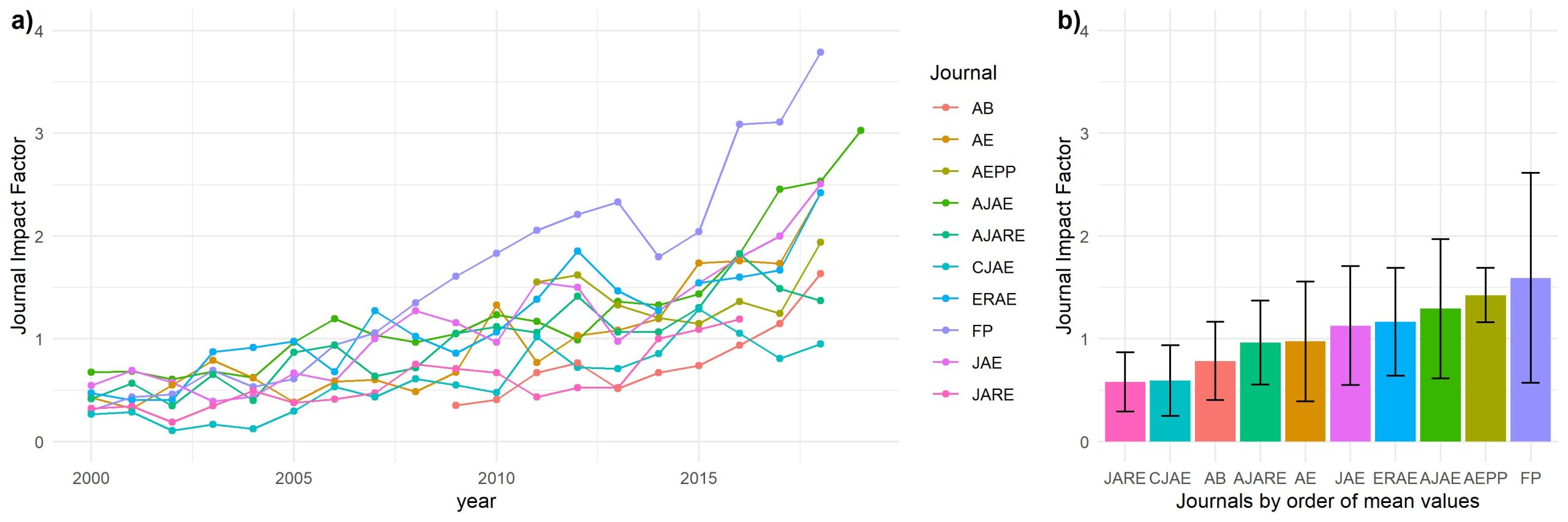

We find that acceptance rates have decreased over time for all journals. For example, acceptance rates at the American Journal of Agricultural Economics dropped from ca. 25% in the early 2000s to 11% in 2019. However, differences across journals are substantial (Figure 1, panel b). Reduced acceptance rates may also be partly the result of substantially increased submissions over time. For example, Agricultural Economics had 49 manuscripts with decisions in 2000, which increased to 812 in 2019.

Figure 1: Share of accepted articles a) over time, and b) variance, by journal. Source: own elaboration.

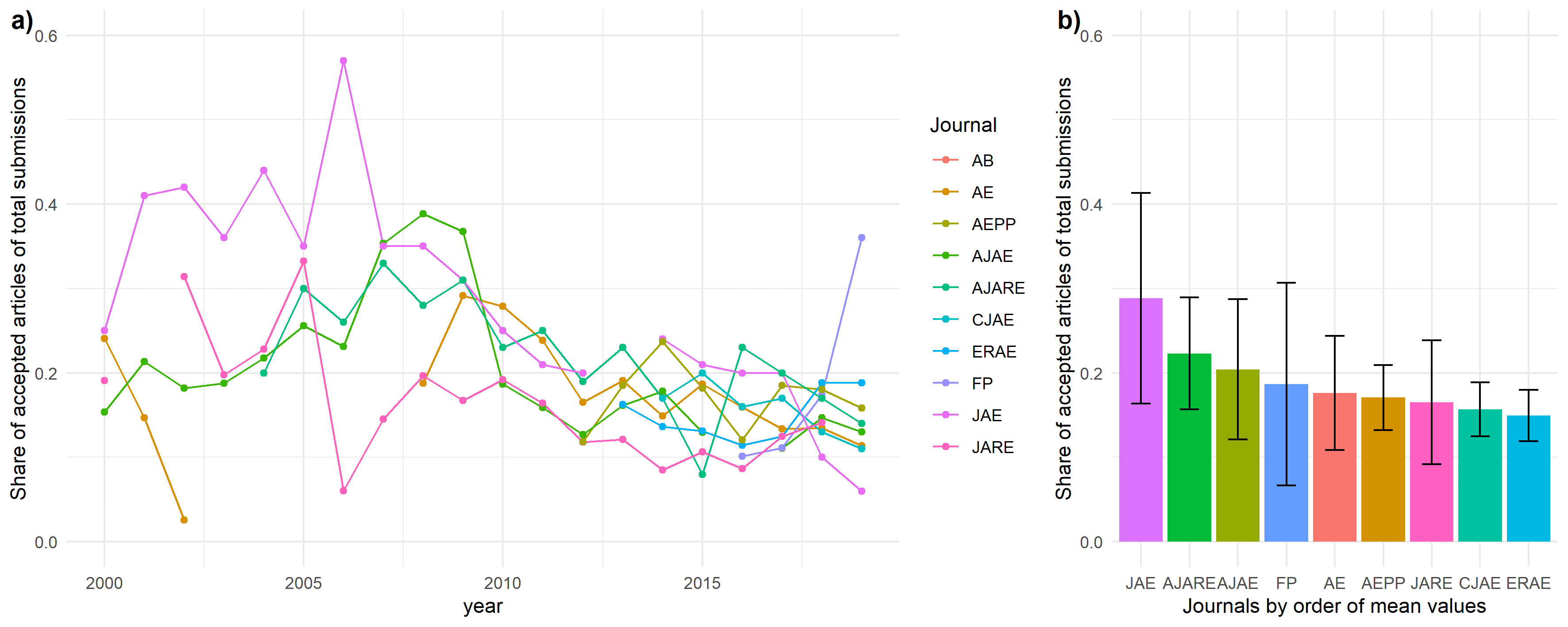

On a more positive note (from the point of view of authors), Figure 2 shows that time spans from submission to first decision (including desk rejections) have also decreased over time in all journals. For example, while the average time from submission to first decision at the Journal of Agricultural Economics was 170 days in 2001, this decreased to 21 days in 2019. Substantial differences across journals remain, although the range across journals has narrowed substantially. Differences in turn-around time across journals may reflect differences in the efficiency of editorial handling procedures but may also be due to different shares of desk rejections. Regarding the latter, however, we lack sufficient information for inference across journals and years. The decrease in turn-around times over the last 20 years almost certainly reflects the increased use and efficiency of electronic submission systems.

Figure 2: Time to first decision a) over time, and b) variance, by journal. Source: own elaboration.

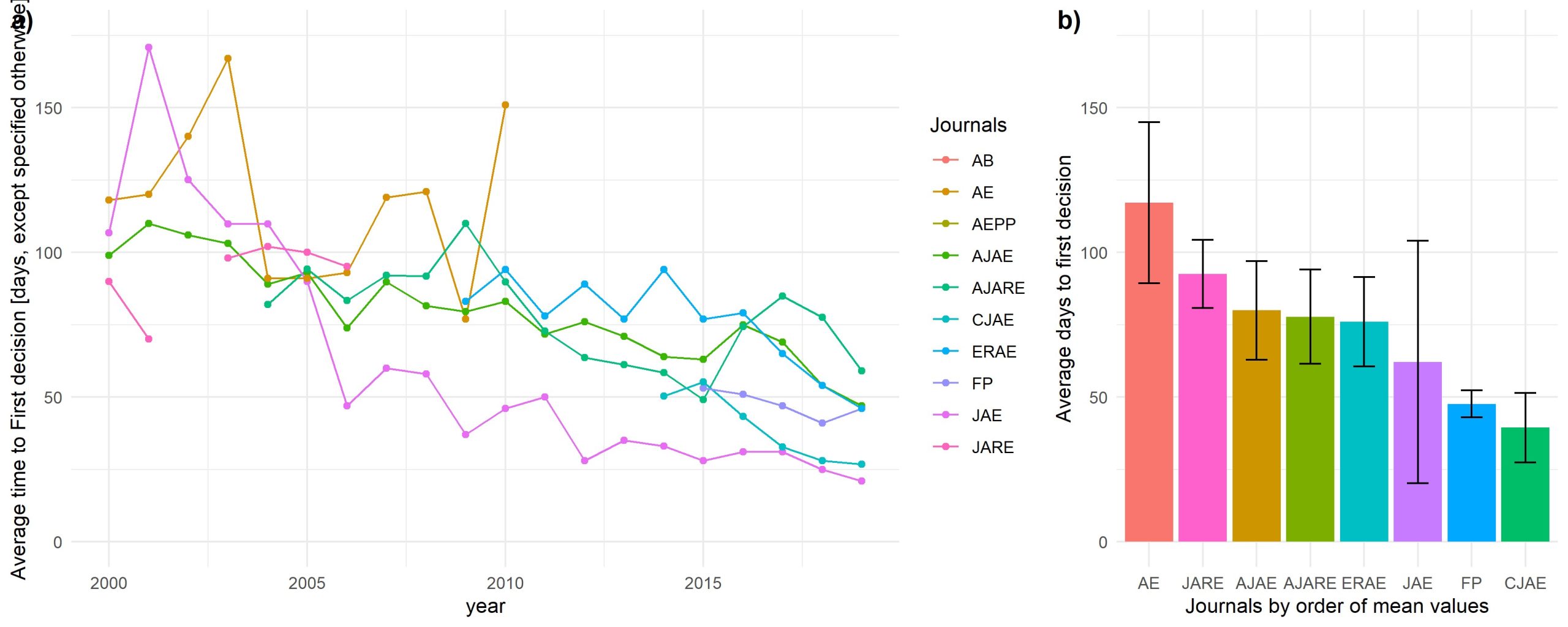

Figure 3 shows that impact factors have increased for the considered journals overall, but not by the same magnitude for all of them. More specifically, FP, AEPP and AJAE have experienced substantial increases in impact factors, while others have remained at rather low levels.

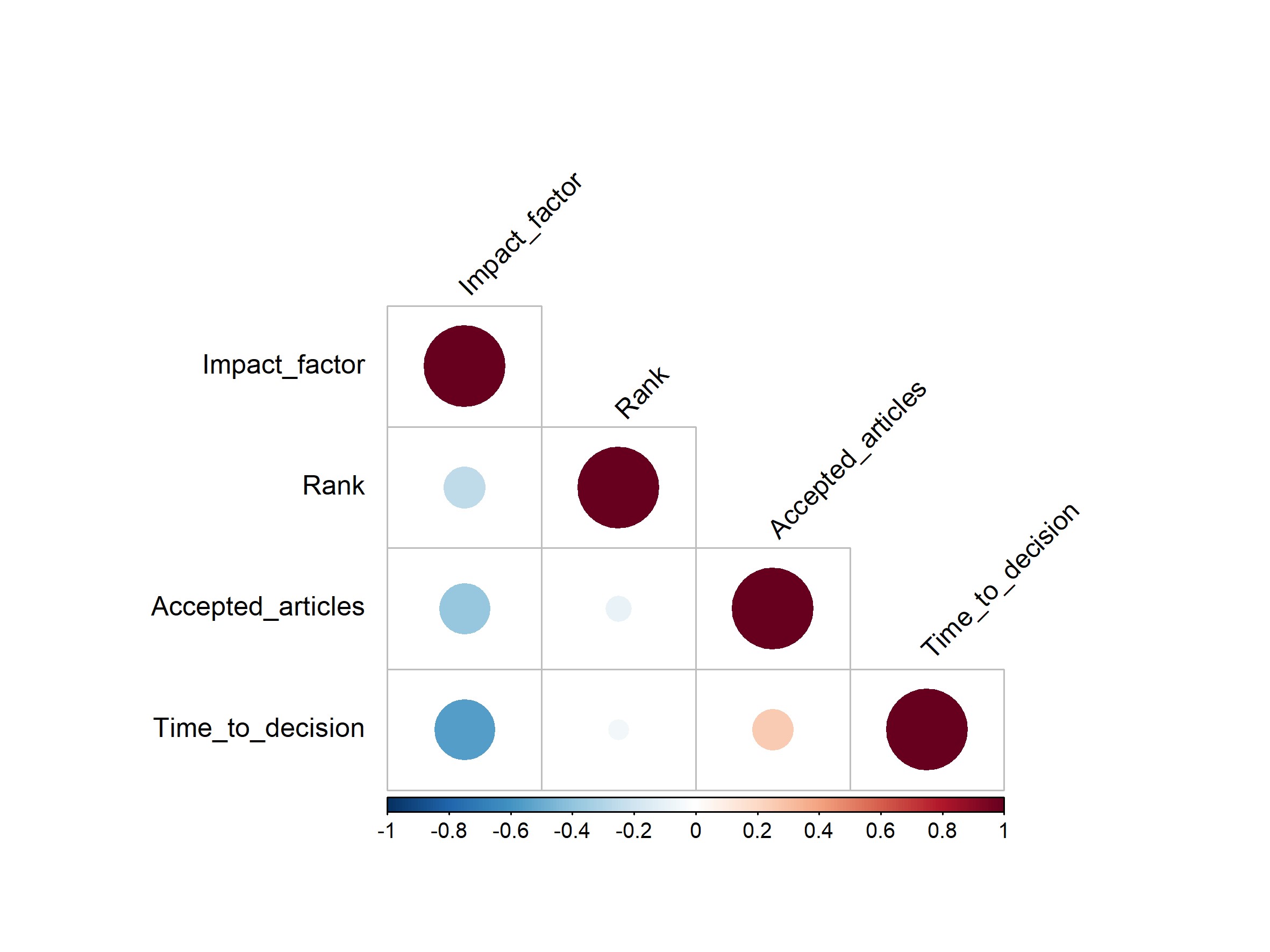

We find clear trade-offs across journals among the different variables. For example, a high share of accepted articles comes with longer times to first response, while a high impact factor is associated with a lower share of accepted articles (correlation: -0.34) but also a longer time to decision (-0.53). Thus, there are no clear-cut sweet spots for authors because quicker decisions may well mean a lower chance of success.
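To make the kind of calculation behind such coefficients concrete, the following is a minimal sketch of pairwise correlations between indicators. It assumes Pearson correlation, which may differ from the measure used in the underlying paper, and the observations, the field names and the helper functions `pearson` and `correlation_matrix` are all hypothetical illustrations rather than values or code from our dataset.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


def correlation_matrix(rows, columns):
    """Return {(col_a, col_b): r} for every ordered pair of columns."""
    series = {col: [row[col] for row in rows] for col in columns}
    return {
        (a, b): pearson(series[a], series[b])
        for a in columns
        for b in columns
    }


# Hypothetical journal-year observations, made up purely for illustration.
# They are NOT taken from the dataset described in this post.
observations = [
    {"impact_factor": 0.8, "acceptance_rate": 0.35, "days_to_decision": 60},
    {"impact_factor": 1.2, "acceptance_rate": 0.28, "days_to_decision": 95},
    {"impact_factor": 1.9, "acceptance_rate": 0.22, "days_to_decision": 70},
    {"impact_factor": 2.5, "acceptance_rate": 0.15, "days_to_decision": 110},
    {"impact_factor": 3.1, "acceptance_rate": 0.12, "days_to_decision": 85},
]

indicators = ["impact_factor", "acceptance_rate", "days_to_decision"]
matrix = correlation_matrix(observations, indicators)

# Print each pair once (upper triangle of the symmetric matrix).
for i, a in enumerate(indicators):
    for b in indicators[i + 1:]:
        print(f"{a} vs {b}: {matrix[(a, b)]:+.2f}")
```

A negative coefficient for a pair such as impact factor and acceptance rate would indicate the kind of trade-off described above; with real data, one would also want to test whether each coefficient differs significantly from zero.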

Correlating the ranking used to select journals, i.e. based on six different rankings (see Finger et al. 2022), with indicators (turn-around, impact factor, acceptance rate), we find weaker correlations (Figure 4). The ranking correlates negatively and significantly with impact factor (-0.36) and accepted article shares (-0.23), but not significantly with time to decision. Again, there is no sweet spot because climbing the ranks comes along with lower impact factor and fewer accepted articles as well as no clear gain in waiting times. Additionally, if one aims for a journal with a high acceptance rate, this comes on average with more time until a decision. Given these trade-offs, authors need to consider which indicator they value most to optimise their submission choices. There are, however, substantial variations across journals, and the performance of a specific journal may nevertheless suit an author's individual preferences better than that of others.

Using a DEA, we observe that journals like FP, JAE and AJAE have an excellent score.

Figure 4: Correlation between different indicators, where the direction is color coded, and the absolute size of the coefficients corresponds to circle size. Source: own elaboration.

For authors, our results show there is no free lunch, i.e. authors face trade-offs. For example, submitting to a higher impact factor journal means on average also longer turn-around time and lower acceptance rates. Yet, a low acceptance rate may to some extent also be seen as desirable as it indicates competitiveness, which may signal quality. The dataset presented here gives the first coherent overview of relevant information for authors to guide submission decisions. Our analysis also provides important conclusions for editors and publishers. There are substantial data gaps on rather simple information and the comparability of key indicators is often low (e.g. because the calculation of values like acceptance rates differs). Thus, efforts are needed to make data available in coherent standards, comparable across journals. Note that we need journal comparisons based on more than impact factors. Along these lines, other important metrics like the share of open access papers, available code, available data, successful replications of papers in the journal, and the diversity of authors and reviewers are not yet available. Agricultural economic associations may also stimulate further surveys to synthesise where we are and what authors actually need and value when making submission decisions.

Interested in reading more?

Finger, R., Droste, N., Bartkowski, B., Ang, F. (2022). A note on performance indicators for agricultural economic journals. Journal of Agricultural Economics.

This blog post draws heavily from this reference.